Sentient Machines: One computer’s dream to compose music

A philosophical exploration of the creative ability of computers.

Upon learning about my job in data science, a Bumble date asked “so, will AI take over the world?”, and I struggled to stop my eyes from rolling. And yet, here I am, about to wade into the controversial topic of sentient machines. All I ask is for forgiveness.

Computational creativity

What do you think of when you hear the term “computational creativity”? When I first heard about a class titled just that, I thought we’d be learning about Generative AI, creating our own Gen AI models, and exploring ways in which we could use computers for our own creative pursuits. All that was true, but what I didn’t realize was that we’d also be in for plenty of philosophical debates, a sprinkle of existential crisis, and a fair amount of examining our professor’s Twitter wars.

Research in computational creativity [1] is defined as:

The philosophy, science, and engineering of computational systems which, by taking on particular responsibilities, exhibit behaviours that unbiased observers would deem to be creative.

It proposes the idea that AI systems can be more than mere tools to enhance human creativity. Instead, machines could exhibit autonomous creativity without human intervention. While this might sound like science fiction, with the current growth of generative AI, machines are perhaps closer than ever to being independent of humans.

What makes a computer creative?

Creativity is often discussed as a human quality, and humans have produced artefacts of creativity (e.g. poems, paintings, music, dance, etc.) since the beginning of our time. Despite all that, it is also one of the most difficult concepts to define. Still, we can agree on some attributes of creativity:

Innovation: a new or novel process. E.g. using a new tool to make music

Imagination: a new or surprising product. E.g. creating a new genre of music

Accountability: being able to explain the process. E.g. explaining how the music was created

Intentionality: having a reason to create or do something. E.g. music created after being inspired by something.

Reflection: being able to understand what is produced and how it is perceived. E.g. articulating the effect of the composed music on listeners.

While these attributes are not exhaustive, a program that exhibits some of these traits could arguably bring us one step closer to considering it “creative”.

Just recently, a portrait painted by a humanoid robot sold for US$1.32 million, once again sparking debate about whether it really “challenges what it means to be human”, or if it is only a “very sophisticated, dressed-up version of those periodic news stories about farmyard animals that can supposedly paint like Pablo Picasso”.

The robot, Ai-Da, exhibits many attributes of creativity during her creative process. Before painting, she “discusses with its creators the things it would like to paint”, including “style, content, tone and texture”. Yet even with her seemingly high level of cognition, many of us would hesitate to claim that she was truly being creative.

Do we need to define creativity to make progress? Impressionism did not become widely accepted because it was well-defined - all it took was a shift in the popular perspective. In the same way, perhaps Ai-Da and other creative machines do not need to have explicit definitions of why and how they are creative. Instead, maybe there will be a gradual acceptance of machines as creative beings. At least, that is the vision of Computational Creativity.

Weak vs Strong Computational Creativity

Even without a solid definition of creativity, computational creativity projects can still be distinguished by the kind of creativity they aim for. For simplicity, they can be classified as either weak or strong projects:

Weak projects are more concerned with the aesthetics and value of their output. (E.g. how moving an orchestral piece is, how coherent the different instruments are, or how many styles of music it can produce.)

Strong projects focus on having features that people would perceive as exhibiting creativity, where machines lead the creative process. (E.g. the music might not be the most coherent, but it could be very original, and show some kind of imagination in how it was created.)

Could I, a mortal being, create a machine capable of being somewhat autonomously creative? Half driven by curiosity and half driven by the need to build a strong project for my grades, I attempted to create a creative machine.

The next section describes how I came up with the system.

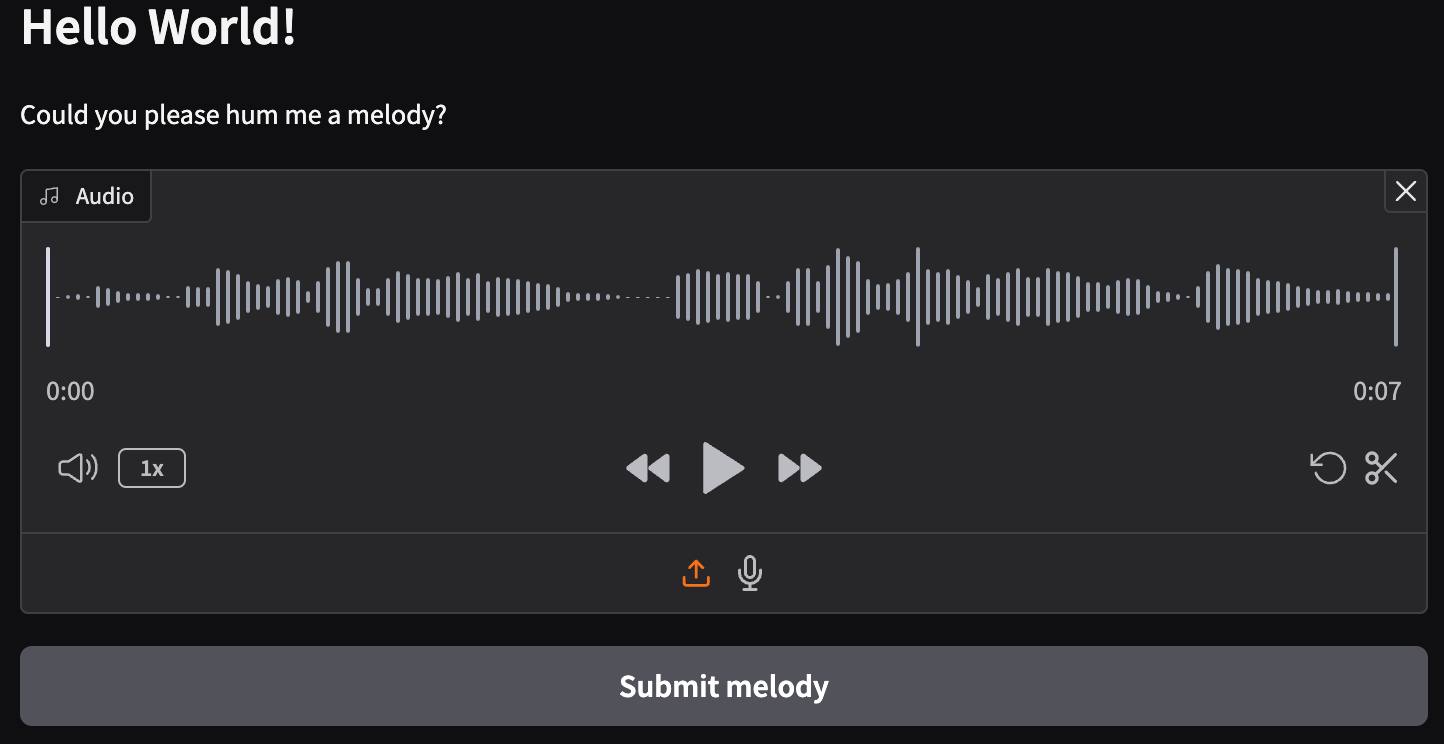

Hello World, could you please hum me a melody?

“Could you please hum me a melody?”, requests composer Eunike Tanzil to seemingly unsuspecting passers-by, before going on to compose orchestral music from the theme of the given melody. She then invites her followers to discuss what the music reminds them of in the comments section.

Having watched a whole series of her ‘Hum me a Melody’ short videos, I’ve often wondered what she would create out of random melodies I could think of. More ambitiously, I’ve also wondered what music I would create given the same melodies. While I unfortunately lack the talent and skills to compose my own epic orchestral music from scratch, hopefully I have some skills to program an AI system that could do that.

Inspired by Tanzil’s videos, this project takes a hummed melody, and creates a short music video.

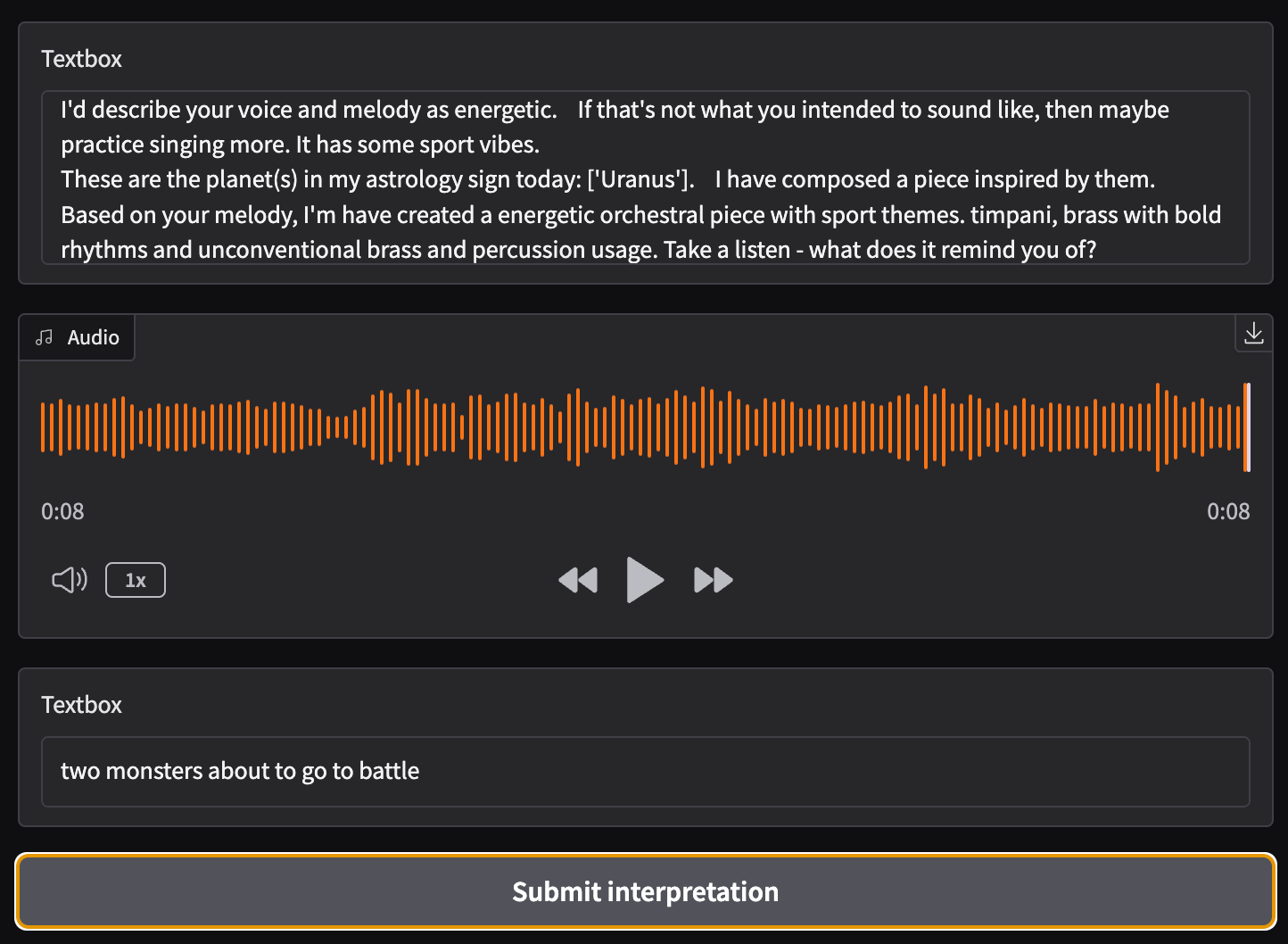

First, it prompts the user for a melody, and infers the mood of the audio recording.
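As a rough illustration of this step: a multi-label audio classifier typically returns one score per tag, and the "mood" can be read off as the handful of highest-scoring tags. The sketch below assumes that output shape. The function name `top_moods`, the cut-off values, and the example scores are all hypothetical and are not taken from the project's code.

```python
def top_moods(scores: dict[str, float], k: int = 4, min_score: float = 0.1) -> list[str]:
    """Return up to k tags with the highest scores, dropping weak predictions."""
    # Keep only tags the classifier is reasonably confident about (assumed threshold).
    strong = [(tag, score) for tag, score in scores.items() if score >= min_score]
    # Highest score first.
    strong.sort(key=lambda pair: pair[1], reverse=True)
    return [tag for tag, _ in strong[:k]]


# Made-up scores, for illustration only.
example_scores = {
    "dark": 0.62,
    "energetic": 0.48,
    "happy": 0.35,
    "powerful": 0.31,
    "calm": 0.04,
}
moods = top_moods(example_scores)
print(moods)
```

Keeping a few tags rather than a single winner lets seemingly contradictory moods coexist in the prompt, which is part of the fun.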

Then, it takes inspiration from the day’s planet positions (yes, we’re talking about actual live positions of astrological planets), and decides which instruments best reflect the theme of the day based on the corresponding piece in Holst’s Planets. A song is then generated using a text prompt describing the mood and the day’s theme, and conditioned on the hummed melody.
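To make the prompt-building step concrete, here is a minimal sketch of how mood tags and planet-inspired instrument descriptions could be combined into one text prompt. It is an assumption about the general shape, not the project's actual template: `PLANET_SOUNDS` and `build_music_prompt` are hypothetical names, the two descriptors are the ones quoted in the sample output in this post, and how the day's planets are chosen from live positions is not shown, so the list of planets is simply passed in.

```python
# Descriptors quoted from this post's sample output; other planets are omitted
# because their descriptions are not given in the article.
PLANET_SOUNDS = {
    "Mercury": "flute, clarinet with light and nimble melodies",
    "Venus": "french horn with warm, mellow tones",
}


def build_music_prompt(moods: list[str], planets: list[str]) -> str:
    """Compose a text prompt from mood tags and the day's planets (hypothetical template)."""
    # Planets without a known descriptor are skipped rather than guessed.
    instruments = [PLANET_SOUNDS[p] for p in planets if p in PLANET_SOUNDS]
    parts = ["Orchestral piece"]
    if moods:
        parts.append("in a " + ", ".join(moods) + " mood")
    if instruments:
        parts.append("featuring " + "; ".join(instruments))
    return ", ".join(parts)


prompt = build_music_prompt(
    ["dark", "energetic", "happy", "powerful"],
    ["Mercury", "Venus"],
)
print(prompt)
```

A prompt like this would then go to the music model together with the hummed recording as the melody condition.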

At this point, the audience is invited to listen to the generated music and to describe what the song reminds them of.

Finally, a video encapsulating their thoughts is generated and the music is layered onto the video to create a music video.
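The post does not say which tool does the layering, so the following is only one plausible way to do it: build an ffmpeg command that takes the picture from the generated clip and the sound from the generated song. The helper name `mux_command` and the file names are hypothetical; the ffmpeg options themselves (`-map`, `-c:v copy`, `-c:a aac`, `-shortest`) are standard.

```python
def mux_command(video_path: str, audio_path: str, out_path: str) -> list[str]:
    """Build an ffmpeg command that layers an audio track onto a silent video."""
    return [
        "ffmpeg", "-y",        # overwrite the output file if it exists
        "-i", video_path,      # input 0: generated video
        "-i", audio_path,      # input 1: generated music
        "-map", "0:v:0",       # take the video stream from input 0
        "-map", "1:a:0",       # take the audio stream from input 1
        "-c:v", "copy",        # keep the video frames as they are
        "-c:a", "aac",         # encode the audio so it fits an MP4 container
        "-shortest",           # stop at the end of the shorter stream
        out_path,
    ]


cmd = mux_command("clip.mp4", "song.wav", "music_video.mp4")
print(" ".join(cmd))
# To actually run it (requires ffmpeg on PATH):
#   import subprocess; subprocess.run(cmd, check=True)
```

Since generated clips are often only a few seconds long, `-shortest` would cut the song to the clip's length; looping the video is an alternative if the full song matters more.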

Sample music video output:

A song inspired by Mercury (“flute, clarinet with light and nimble melodies”) and Venus (“french horn with warm, mellow tones”), in a “dark, energetic, happy, powerful” mood that reminded me of “dancing by the river in summer”.

lmfao. If you want music videos for yourself, our resident artist lives here:

(warning: it runs really slow - creating art takes time, and we’re GPU-poor T.T)

Behind the scenes

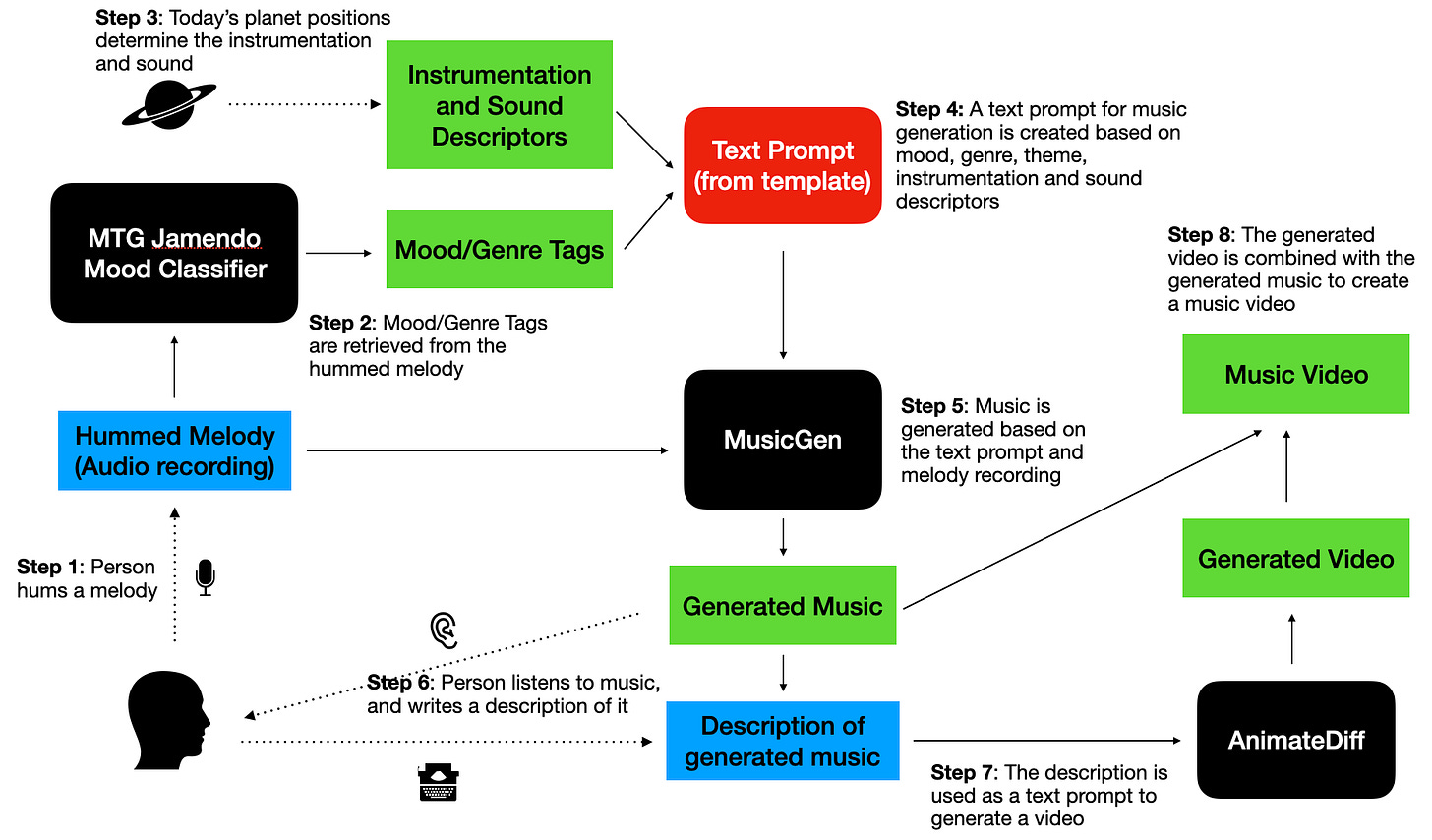

Under this seemingly simple UI are a bunch of models that are used to infer the mood and genre of the melody, and to generate both the music and video outputs. They are not exactly important to the point of the article, but if you must know:

MTG Jamendo Mood Classifier [3]: Trained on an in-house dataset, this model by the Music Technology Group (MTG) at the Universitat Pompeu Fabra (UPF) predicts 56 types of moods and genres on audio files.

MusicGen [4]: Trained on 20,000 hours of licensed music, this model by Meta’s FAIR team generates music based on a text prompt, conditioned on an input audio.

AnimateDiff [5]: Trained on WebVid-10M [6], a dataset of stock videos, this model by ByteDance generates videos based on a text prompt, using epiCRealism [7] as its text-to-image base model.

ChatGPT: Used to categorize mood/genres from the Mood Classifier into categories, and to generate descriptions of each piece in Holst’s Planets.

The full process of how these models are pieced together is illustrated here:

Check it out on: GitHub | Colab | HuggingFace Spaces

Give FACE, please: Evaluating creativity

In this project, the machine’s creativity is reflected in its use of astrology as a source of inspiration. Since both the sound descriptors and the classification of moods into categories were largely generated by ChatGPT, perhaps we can argue that they were original ‘ideas’ and ‘opinions’ from generative AI.

To formally evaluate the creativity of the system, we used the FACE Model [2], an evaluation framework pioneered by our professor (yes, I was trying to give him face, ha ha). Our system fulfils all four components for generative acts:

E: An expression of a concept. Our system aims to create a music video inspired by a user-sung melody and astrology.

C: A concept. Since text prompts are how we control both models, the concept in our system is defined by the text prompt templates that are used to construct inputs for generating both the music and video.

A: An aesthetic measure. On a high level, the music generated is always an orchestral piece, since it was inspired by Tanzil’s orchestral compositions. At a more granular scale, the generated music has a target theme based on what the system has heard, as well as on natural factors like planet positions in astrology.

F: An item of framing information. Our system provides context about its work through text, describing what it has inferred from the user’s input, and its sources of inspiration.

At FACE value (ha ha ha), our project might be considered creative, but does it really show autonomous creativity of the machine?

So… will AI take over the (creative) world?

I’ve explored whether machines can exhibit creativity by programming a system that generates music videos inspired by user melodies and astrology. I’ve tried to build my personal definition of creativity into the system: To me, creativity involves owning your own ideas, and producing something that embodies the ideas. It is not a pursuit of perfection, but a form of expression.

While the system showed elements of independent expression, like interpreting moods and making stylistic choices, it ultimately reflects my own design choices. Honestly, it just feels like I have pretended to be a program - all the ideas were mine, I put everything together in the form of code, and then tried to pass myself off as a computer. As its human creator, I have programmed the system so that it behaves that way, and therefore it is my creativity that is presented and not the machine’s.

Arguably, we could also think of it as a user-machine interaction. From that perspective, the programmer is absent, and we’re left with just a machine obsessed with astrology, a huge fan of Holst, composing songs with videos for a user.

I’m hardly convinced that I’ve created a machine that owns its creative process. This raises the question: What would it take to be convinced? My brain hurts from trying to answer that, so I’ll just wait for that paradigm shift. One thing’s for sure - human me will never produce music like Eunike Tanzil.

More Food for Thought

If I’d started the project by asking generative AI to come up with the project idea, would that qualify as a machine’s creativity and ideas (and I, merely its human minion executing its ideas)?

In some tests, the system generated videos of nude women even though nudity was not mentioned in the prompt. Who should be responsible for the content safety of the system? A: Me, the programmer, B: ByteDance, the text-to-video generative model creators, or C: The Computer?

If today’s computers are not yet creative agents, what do they need to be capable of to show that they are autonomously creative?

Additional Resources

Computational Creativity

Artist in the Machine, a website and book by Arthur I. Miller, explores ideas around creative machines. Great resource to check out other artist/research projects.

Association for Computational Creativity curates a collection of research papers, journals, and other resources for both the philosophical and technical exploration of computational creativity.

Generative Models

JASCO, Meta’s recent advancement in text-to-music generation, a more customizable model compared to MusicGen. Capable of taking in chords or beats as input. (Not open sourced yet).

VideoCrafter2, by Tencent for text-to-video generation, which claims to be better in “visual quality, motion, and concept composition”.

Fanclub

Watch Laufey and Eunike Tanzil produce a song in 3 Hours

Acknowledgements

Many thanks to Prof. Simon Colton and his very comprehensive slides for Computational Creativity (ECS7022P) :) And special thanks to The May Babies for sharing about Ai-Da and lots of thought-provoking content - your enthusiasm motivated me to finish this post way before others.

References

[1] Colton, S., & Wiggins, G. A. (2012). Computational creativity: The final frontier? In ECAI 2012 (pp. 21-26). IOS Press.

[2] Colton, S., Charnley, J. W., & Pease, A. (2011, April). Computational Creativity Theory: The FACE and IDEA Descriptive Models. In ICCC (pp. 90-95).

[3] MTG-Jamendo mood and theme model metadata. https://essentia.upf.edu/models/classification-heads/mtg_jamendo_moodtheme/mtg_jamendo_moodtheme-discogs-effnet-1.json

[4] Copet, J., Kreuk, F., Gat, I., Remez, T., Kant, D., Synnaeve, G., ... & Défossez, A. (2024). Simple and controllable music generation. Advances in Neural Information Processing Systems, 36.

[5] Lin, S., & Yang, X. (2024). AnimateDiff-Lightning: Cross-Model Diffusion Distillation. arXiv preprint arXiv:2403.12706

[6] Bain, M., Nagrani, A., Varol, G., & Zisserman, A. (2021). Frozen in time: A joint video and image encoder for end-to-end retrieval. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 1728-1738).

[7] epiCRealism. https://civitai.com/models/25694